Convolutional Neural Networks (CNNs) represent a pivotal advancement in the realm of artificial intelligence, particularly for image recognition tasks.

Their architecture is designed to learn spatial hierarchies of features from images automatically and adaptively, which makes them especially effective for visual data. Traditional computer vision pipelines rely on hand-engineered features such as edge or texture descriptors. CNNs instead learn directly from raw pixel values, which greatly simplifies development for complex computer vision tasks.

The origins of CNNs date back to the 1980s. Landmark contributions such as Yann LeCun’s LeNet architecture, which applied CNNs to handwritten character recognition, laid the groundwork for later work. Subsequent architectures such as AlexNet, VGGNet, and ResNet pushed the boundaries of what CNNs can achieve and set new records on image classification benchmarks. Convolutional layers reuse the same small set of weights across an entire image, so CNNs scale well to large image datasets. This has helped automate many visual tasks that were previously labor-intensive and time-consuming.

One of the primary motivations behind the rise of CNNs is their applicability across diverse fields. In healthcare, they assist in diagnosing diseases through medical imaging. In autonomous vehicles, they interpret the visual surroundings to help the vehicle navigate safely. In social media, CNNs enable platforms to analyze images for content moderation and to improve user engagement. Their ability to extract and recognize visual patterns makes CNNs a central technology in today’s deep learning landscape.

As the demand for intelligent systems continues to grow, understanding the core principles of Convolutional Neural Networks becomes increasingly vital. By exploring their evolution and applications, one can appreciate the profound impact these networks have made and will continue to make in various domains that rely on advanced image recognition capabilities.

Convolutional Neural Networks (CNNs) are a class of deep learning models particularly adept at processing grid-like data, such as images. At the core of CNN architecture are several fundamental components, each playing a crucial role in the network’s ability to learn and recognize patterns within visual data.

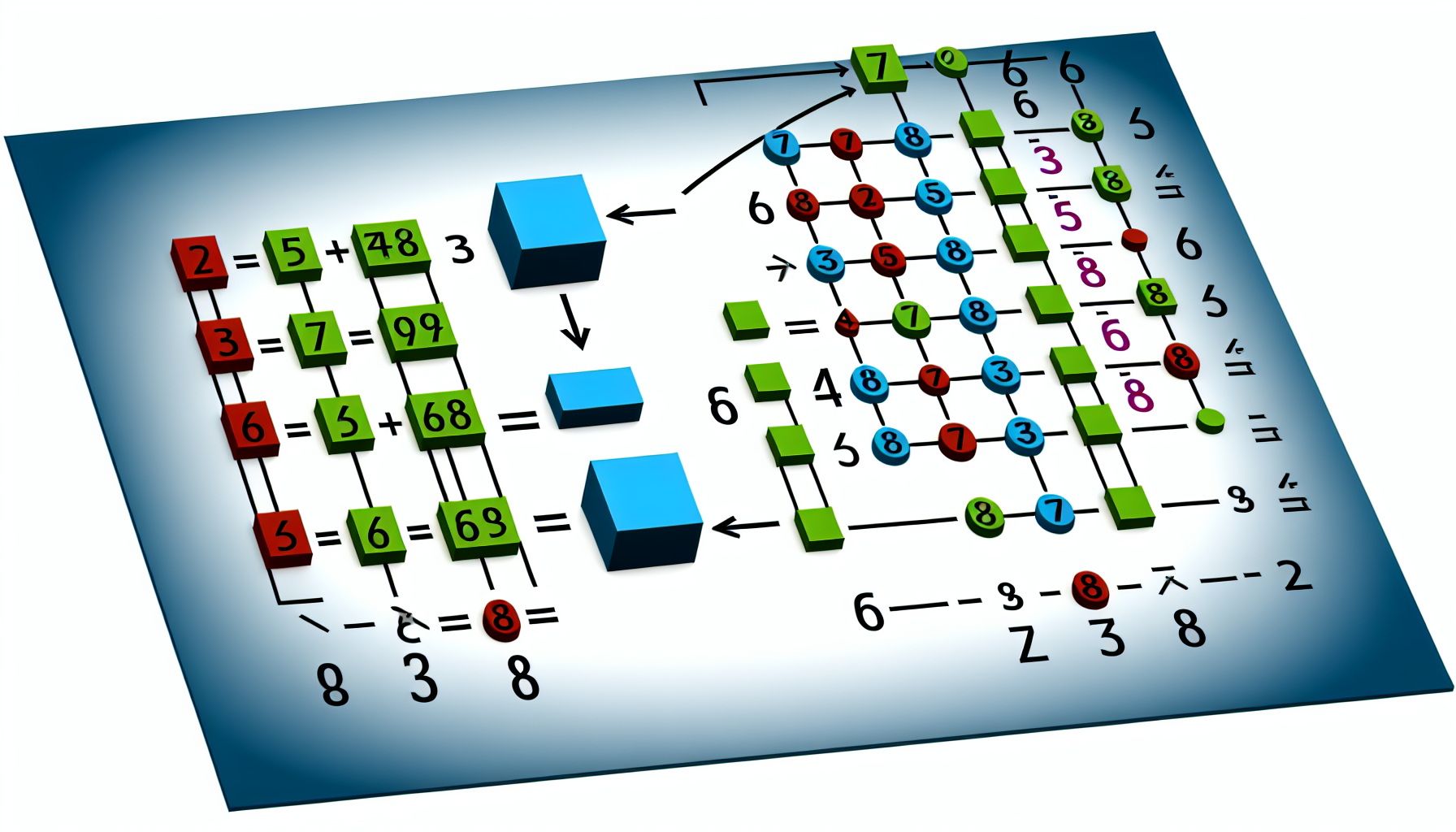

The first primary component is the convolutional layer. This layer applies a set of filters, or kernels, to the input image. Each filter scans across the image and performs an operation called convolution: it multiplies the filter element-wise with the overlapping region of the image and sums the products. Strictly speaking, most deep learning libraries compute cross-correlation, which skips the kernel flip of textbook convolution, but the term "convolution" is used by convention. Through this process, the convolutional layer extracts features such as edges, textures, and shapes. The number of filters and their dimensions strongly affect the model’s capacity to learn different levels of abstraction.
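Two standard formulas make the effect of filter count and size concrete.

- The spatial output size along one dimension is floor((W - K + 2P) / S) + 1, for input size W, kernel size K, padding P, and stride S.
- A layer with N square K×K filters over C input channels has K·K·C·N weights plus N biases.

The sketch below assumes square kernels and one bias per filter. The function names are hypothetical.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (input_size - kernel_size + 2 * padding) // stride + 1


def conv_param_count(kernel_size, in_channels, num_filters, bias=True):
    """Learnable parameters in a conv layer with square kernels (assumption)."""
    weights = kernel_size * kernel_size * in_channels * num_filters
    return weights + (num_filters if bias else 0)


# Example: 32 filters of size 3x3 over a 224x224 RGB image, stride 1, padding 1.
out = conv_output_size(224, 3, stride=1, padding=1)
params = conv_param_count(3, in_channels=3, num_filters=32)
print(out, params)  # 224 896
```

Doubling the number of filters doubles both the weight count and the number of feature maps the layer produces. Enlarging the kernel grows the weight count quadratically.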

Next, we have the pooling layer, which typically follows a convolutional layer. The pooling operation reduces the spatial dimensions of the feature maps created by the convolutional layers. Using techniques such as max pooling or average pooling, the model down-samples the feature maps while retaining the most salient information. This reduction has three benefits:

- it lowers the computational load;
- it makes the representation somewhat more tolerant of small shifts in the input;
- it can help reduce overfitting.

After the sequence of convolutional and pooling layers, CNNs often include fully connected layers. In these layers, every neuron is connected to every neuron in the following layer. This structure lets the network make its final classification from the features extracted by the preceding layers. The output of the final fully connected layer is typically passed through a softmax function, which converts the raw scores into a probability for each class label.
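This sketch illustrates a fully connected output layer followed by softmax. It assumes the features have already been flattened into a list. The weights, biases, and three-class setup are hypothetical values chosen only for illustration.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical flattened features and a 3-class output layer.
features = [0.5, -1.0, 2.0, 0.0]
W = [
    [0.2, 0.1, 0.4, 0.0],
    [-0.3, 0.2, 0.1, 0.5],
    [0.0, -0.1, -0.2, 0.3],
]
b = [0.0, 0.1, -0.1]

probs = softmax(dense(features, W, b))
print([round(p, 3) for p in probs])
```

Here the first class receives the largest raw score (0.8), so it also receives the highest probability. Softmax preserves the ordering of the scores while making them sum to 1.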

Each of these components—convolutional layers, pooling layers, and fully connected layers—works in tandem to enable CNNs to learn hierarchical representations of the input images, facilitating accurate image recognition tasks. Diagrams illustrating these layers and their connections can significantly enhance comprehension of how CNNs function as a cohesive unit in the realm of image processing.

Convolution is a fundamental operation in the field of image processing and is particularly vital in the functioning of Convolutional Neural Networks (CNNs). It involves the application of a filter or kernel to an input image, where the filter slides or convolves across the image, computing dot products at each position to produce a feature map. This process allows the CNN to detect specific features, such as edges, textures, or shapes within an image.

The core idea behind convolution lies in the interaction between the input data and the filter. A filter is a small matrix of weights. In a CNN, these weights are learned during training rather than designed by hand, and each filter comes to respond to a particular pattern in the input. As the filter moves over the image, it weights the pixel values it overlaps and produces an output that highlights regions where that pattern appears. For instance, an edge detection filter responds strongly to transitions between different colors or intensities, which are essential for recognizing objects within the image.

Different types of filters serve different purposes in feature extraction. For example, a Gaussian filter is often used for blurring images, while a Sobel filter helps in detecting gradients. In practice, multiple filters are applied within a single convolutional layer, allowing the network to learn a variety of features simultaneously. Each feature map generated from these filters contributes to the overall understanding of the image, enabling higher layers in the network to piece together complex information.
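To show what "detecting gradients" and "blurring" mean numerically, this sketch applies two classical hand-designed 3×3 kernels to a single image patch. The kernels are a horizontal-gradient Sobel kernel and a normalized Gaussian kernel. The patch values are hypothetical. In a trained CNN, kernel values are learned rather than fixed like these.

```python
# Classical hand-designed 3x3 kernels (in a CNN, kernel values are learned instead).
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]
GAUSSIAN = [
    [1 / 16, 2 / 16, 1 / 16],
    [2 / 16, 4 / 16, 2 / 16],
    [1 / 16, 2 / 16, 1 / 16],
]


def response(patch, kernel):
    """Element-wise multiply a 3x3 patch by a 3x3 kernel and sum the products."""
    return sum(p * k for prow, krow in zip(patch, kernel) for p, k in zip(prow, krow))


flat = [[10, 10, 10]] * 3   # uniform region
edge = [[0, 0, 255]] * 3    # dark-to-bright vertical edge

print(response(flat, SOBEL_X))   # 0: no change in intensity along x
print(response(edge, SOBEL_X))   # 1020: strong response at the edge
print(response(flat, GAUSSIAN))  # 10.0: blurring leaves a uniform region unchanged
```

The Sobel kernel's weights sum to zero, so it ignores constant brightness and reacts only to change. The Gaussian kernel's weights sum to one, so it averages neighboring pixels without altering overall brightness.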

As a practical example, consider a 3×3 filter applied to a 5×5 pixel image.

1. The operation starts with the filter aligned over the top-left 3×3 region of the image.
2. It computes the dot product between the filter and that region and writes the result as a single value in the output feature map.
3. The filter then slides one pixel at a time (a stride of 1) across and down the image, repeating the calculation at each position.

With no padding, the filter fits in 5 - 3 + 1 = 3 positions along each dimension, so the result is a 3×3 feature map. This output is smaller than the input, and each of its values summarizes how strongly the corresponding 3×3 neighborhood matches the filter.
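The sliding-window procedure above can be sketched in plain Python. This is a minimal sketch assuming a single-channel image, no padding, and a configurable stride. Like most deep learning libraries, it computes cross-correlation rather than flipping the kernel. The function name `conv2d_valid` and the example values are hypothetical.

```python
def conv2d_valid(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map.

    Computes cross-correlation: the kernel is not flipped before the
    element-wise multiply-and-sum.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Dot product between the kernel and the patch it currently overlaps.
            total = sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


image = [
    [1, 2, 3, 4, 5],
    [6, 7, 8, 9, 10],
    [11, 12, 13, 14, 15],
    [16, 17, 18, 19, 20],
    [21, 22, 23, 24, 25],
]
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]

fmap = conv2d_valid(image, kernel)
print(len(fmap), len(fmap[0]))  # 3 3
print(fmap)  # [[-6, -6, -6], [-6, -6, -6], [-6, -6, -6]]
```

Pixel values in this example increase by 1 from left to right. The kernel subtracts the right column of each patch from the left column, so every output equals 3 × (-2) = -6. With `stride=2`, the same call would produce a 2×2 map instead.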

Pooling layers are integral components of Convolutional Neural Networks (CNNs), designed to reduce the spatial dimensions of feature maps, thereby enhancing computational efficiency. By downsampling the data, pooling layers streamline the information processing in subsequent layers while helping to retain the most critical features of the input images. Two predominant types of pooling are max pooling and average pooling, each serving a specific purpose in the network’s architecture.

Max pooling selects the maximum value within each pooling window, capturing the most prominent activation in that region. This preserves the salient features of the image while ignoring minor variations or noise that contribute little to the high-level representation. Average pooling, on the other hand, computes the mean of all values in the window. This gives a smoother representation that can be useful when generalization matters more than picking out the single strongest response. Both methods aim to reduce the computational burden while preserving the information the network needs to perform well.
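The difference between the two methods is easiest to see on a small example. This sketch applies 2×2 pooling with stride 2 to a 4×4 feature map. The window size, stride, feature-map values, and the function name `pool2d` are illustrative assumptions.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2D feature map with max or average pooling (no padding)."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Collect every value inside the current window.
            window = [
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            ]
            if mode == "max":
                row.append(max(window))
            elif mode == "avg":
                row.append(sum(window) / len(window))
            else:
                raise ValueError(f"unknown mode: {mode}")
        pooled.append(row)
    return pooled


fmap = [
    [1, 3, 2, 0],
    [4, 8, 1, 1],
    [0, 2, 6, 5],
    [1, 1, 7, 2],
]
print(pool2d(fmap, mode="max"))  # [[8, 2], [2, 7]]
print(pool2d(fmap, mode="avg"))  # [[4.0, 1.0], [1.0, 5.0]]
```

Both methods shrink the 4×4 map to 2×2. Max pooling keeps the strongest activation in each window (8 in the top-left), while average pooling smooths it out (4.0).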

Downsampling through pooling layers reduces memory usage and speeds up processing. Pooling layers have no learnable weights themselves. By shrinking the feature maps, however, they reduce the computation in later layers and the number of parameters in any fully connected layers that follow. Pooling also involves trade-offs. Excessive downsampling discards fine spatial detail, such as the precise location of features, which can impair the network’s ability to recognize complex patterns in images.
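A quick calculation shows how fast repeated pooling shrinks a feature map. This sketch assumes a 224×224 input, 2×2 windows with stride 2, and no other size-changing layers in between. The input size and the function name are illustrative.

```python
def pooled_sizes(input_size, num_pools, size=2, stride=2):
    """Spatial size after each successive pooling layer (no padding)."""
    sizes = [input_size]
    for _ in range(num_pools):
        sizes.append((sizes[-1] - size) // stride + 1)
    return sizes


sizes = pooled_sizes(224, 5)
print(sizes)  # [224, 112, 56, 28, 14, 7]
# Activations per channel at each stage: each pool keeps about 1/4 of them.
print([s * s for s in sizes])  # [50176, 12544, 3136, 784, 196, 49]
```

After five such pools, each position in the 7×7 map summarizes a 32×32 region of the original image. This is where the savings come from, and also why fine positional detail is lost.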

Incorporating pooling layers effectively requires a balanced approach that considers the specific requirements of the task at hand. By judiciously selecting pooling strategies, practitioners can enhance the performance of CNNs, making them robust and effective for various image recognition tasks. The thoughtful integration of pooling mechanisms continues to be a pivotal aspect of developing efficient and powerful neural network architectures.

Training a Convolutional Neural Network (CNN) is a vital step in developing a model capable of effectively recognizing images. The learning process of a CNN involves multiple stages, primarily utilizing concepts like forward propagation, backpropagation, and loss functions. Understanding these components offers a glimpse into how CNNs acquire knowledge and refine their performance based on data input.

During forward propagation, an input image passes through the network’s layers in sequence. Each convolutional layer applies multiple filters to its input and generates feature maps. As the data moves deeper into the network, the layers extract increasingly complex features, from simple edges to intricate patterns. The output of the final layer is then passed through a softmax function, which produces a probability for each predefined class. The class with the highest probability is taken as the network’s prediction.

Forward propagation alone does not improve the model. Training adds three further steps:

1. A loss function, typically cross-entropy for classification, quantifies the error between the predicted probabilities and the true label.
2. Backpropagation applies the chain rule to compute the gradient of this loss with respect to every weight in the network.
3. An optimization algorithm, such as gradient descent, uses these gradients to update the weights in the direction that reduces the loss.

Repeating these steps over many iterations lets the model learn from its misclassifications and steadily improve its predictions.
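This sketch illustrates the loss, gradient, and update steps on the smallest possible case: a single linear layer followed by softmax, trained with cross-entropy. It is not a full CNN. Gradients for convolutional filters follow the same chain rule, but the bookkeeping is longer. The toy dataset, learning rate, and function names are hypothetical. The sketch relies on the standard result that, for softmax combined with cross-entropy, the gradient with respect to each logit is the predicted probability minus the one-hot target.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(probs, label):
    """Loss is small when the true class gets high probability."""
    return -math.log(probs[label])


def train_step(W, x, label, lr=0.1):
    """One gradient-descent step for a linear layer + softmax (updates W in place).

    For softmax + cross-entropy, dLoss/dlogit_k = p_k - y_k, and the chain rule
    gives dLoss/dW[k][i] = (p_k - y_k) * x_i.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    probs = softmax(logits)
    loss = cross_entropy(probs, label)
    for k, row in enumerate(W):
        grad_logit = probs[k] - (1.0 if k == label else 0.0)
        for i in range(len(row)):
            row[i] -= lr * grad_logit * x[i]
    return loss


# Hypothetical toy problem: 2 input features, 2 classes, weights start at zero.
W = [[0.0, 0.0], [0.0, 0.0]]
data = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]

losses = []
for epoch in range(50):
    epoch_loss = sum(train_step(W, x, y) for x, y in data) / len(data)
    losses.append(epoch_loss)
print(round(losses[0], 4), round(losses[-1], 4))
```

With all-zero weights, both classes start at probability 0.5, so the first epoch's loss is ln 2 ≈ 0.693. Each update then raises the score of the correct class, and the loss falls in every subsequent epoch.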

High-quality training data is essential. A diverse and representative dataset is needed for the CNN to generalize well to unseen images. Splitting the dataset into training, validation, and test sets allows a thorough evaluation of the model’s performance and makes overfitting detectable. The validation set is used to tune hyperparameters, and the held-out test set gives a final estimate of how accurately the model predicts on new data. With these principles in mind, practitioners can train their CNNs effectively for a range of image recognition tasks.
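A three-way split can be sketched in a few lines. This sketch assumes a 70/15/15 split, a fixed random seed for reproducibility, and hypothetical image file names. The right proportions depend on dataset size and the task.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle and split a list into train, validation, and test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


image_ids = [f"img_{i:04d}.png" for i in range(1000)]  # hypothetical file names
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))  # 700 150 150
```

Shuffling before splitting avoids accidental ordering effects, such as all images of one class landing in the test set. The three subsets never share an item.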

Convolutional Neural Networks (CNNs) have become a cornerstone of image recognition and affect many sectors. One of the most prevalent applications is facial recognition, which is commonly used in security systems and social media platforms. Facebook, for example, used CNN-based face recognition to suggest tags for people in photographs before shutting that system down in 2021. Such applications can improve user experience, but they also raise privacy concerns and ethical questions.

Another significant application is object detection, which is used in several industries, including retail and manufacturing. Real-time object recognition with CNNs can automate inventory management and let businesses track stock levels precisely. Automated checkout systems are a notable example. CNNs identify products from camera images, which streamlines purchasing and reduces human error.

In healthcare, CNNs are transforming medical imaging analysis. They are used in radiology and pathology to help detect anomalies in images such as X-rays and MRIs. Some studies have reported that CNNs can match or exceed traditional methods at identifying certain diseases, with the potential to shorten diagnosis time and improve patient outcomes. Challenges remain, however, particularly the need for large annotated datasets and the difficulty of interpreting CNN outputs.

Self-driving cars also rely heavily on CNNs for image processing tasks such as lane detection and obstacle identification. Companies such as Tesla and Waymo use CNN technology to interpret a vehicle’s surroundings in support of safe and efficient navigation. Despite these applications, the reliability of CNNs in unpredictable environments remains an area of active research. The gap shows the need to balance the benefits of the technology against its limitations.

Implementing Convolutional Neural Networks (CNNs) successfully requires thoughtful consideration of various factors such as data preparation, model architecture, hyperparameter tuning, and performance evaluation. To begin, data preparation is crucial; it involves preprocessing your image dataset to enhance the performance of your CNN. Common techniques include resizing images to a consistent dimension, normalizing pixel values to a range between 0 and 1, and augmenting data to increase variability and reduce overfitting. Utilizing methods like rotation, flipping, and scaling within the augmentation process can significantly benefit the model’s generalization capability.
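Two of the preprocessing steps mentioned above, normalization and random flipping, can be sketched without any image library. This sketch assumes 8-bit grayscale images stored as nested lists and a 50% flip probability. Resizing, rotation, and scaling are omitted because they need interpolation, which an image library would normally provide. The function names are hypothetical.

```python
import random


def normalize(image):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left-to-right (a common augmentation)."""
    return [list(reversed(row)) for row in image]


def augment(image, rng, flip_prob=0.5):
    """Randomly flip the image; real pipelines add rotation, scaling, etc."""
    return horizontal_flip(image) if rng.random() < flip_prob else image


image = [
    [0, 128, 255],
    [64, 32, 16],
]
rng = random.Random(42)
print(normalize(image))
print(horizontal_flip(image))  # [[255, 128, 0], [16, 32, 64]]
print(normalize(augment(image, rng)))
```

Augmentation is applied randomly and afresh each time an image is drawn during training. The model therefore sees slightly different versions of the same picture from epoch to epoch.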

Next, selecting the appropriate model architecture is essential. Several established architectures, such as VGGNet, ResNet, and Inception, serve as excellent starting points for image recognition tasks. Each architecture has its unique strengths concerning depth, computational efficiency, or performance on specific datasets. It is advisable to experiment with pre-trained models, especially when limited data availability might constrain the training of a model from scratch. Transfer learning can leverage existing features from these widely used architectures to improve the outcomes of your project.

Hyperparameter tuning is another critical aspect of CNN implementation. This process adjusts settings such as the learning rate, batch size, and number of epochs to optimize the model’s performance. Grid search, which tries every combination from a predefined set, and random search, which samples a fixed number of combinations, are systematic ways to find the hyperparameters that perform best on the validation set.
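This sketch contrasts grid search and random search. The `evaluate` function is a hypothetical stand-in for "train the CNN with these settings and return validation accuracy". Here it is a toy formula that peaks at a learning rate of 0.01 and a batch size of 64. The search space and budget are also assumptions.

```python
import itertools
import math
import random


def evaluate(learning_rate, batch_size):
    """Hypothetical stand-in for training + validation accuracy (toy formula)."""
    return 1.0 - abs(math.log10(learning_rate) + 2) * 0.1 - abs(batch_size - 64) / 1000


search_space = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [32, 64, 128],
}

# Grid search: try every combination (3 x 3 = 9 runs here).
grid = [
    dict(zip(search_space, values))
    for values in itertools.product(*search_space.values())
]
best_grid = max(grid, key=lambda cfg: evaluate(**cfg))

# Random search: sample a fixed budget of configurations.
# The learning rate is sampled on a log scale, which is a common choice.
rng = random.Random(0)
samples = [
    {"learning_rate": 10 ** rng.uniform(-4, -1), "batch_size": rng.choice([32, 64, 128])}
    for _ in range(5)
]
best_random = max(samples, key=lambda cfg: evaluate(**cfg))

print(best_grid)  # {'learning_rate': 0.01, 'batch_size': 64}
print(best_random)
```

The cost of grid search grows multiplicatively with every hyperparameter added. Random search keeps a fixed budget and can explore values between the grid points.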

Performance evaluation should not be overlooked when implementing CNNs. Metrics such as accuracy, precision, recall, and F1-score show how well the model performs. TensorBoard works with TensorFlow, and also with PyTorch through `torch.utils.tensorboard`. It can monitor these metrics during the training and validation phases.
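The four metrics can be computed directly from true and predicted labels. This sketch assumes a binary task with hypothetical labels (1 = "defect present", 0 = "no defect"). Multi-class versions average these per-class values.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    correct = sum(t == p for t, p in zip(y_true, y_pred))

    accuracy = correct / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


# Hypothetical labels for eight images.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]
print([round(m, 3) for m in binary_metrics(y_true, y_pred)])  # [0.625, 0.667, 0.5, 0.571]
```

Here the model finds only half of the actual defects (recall 0.5) even though two of its three positive predictions are correct. Accuracy alone would hide this distinction.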

Finally, for those seeking to delve deeper into CNN implementation, both TensorFlow and PyTorch offer extensive documentation and community forums. Online courses and tutorials can guide users in mastering these frameworks, enhancing their skills in developing advanced image recognition models.

The field of Convolutional Neural Networks (CNNs) is evolving rapidly, and future trends show significant promise in enhancing the capabilities of image recognition technologies. One of the most notable advancements is transfer learning, which allows models that have been pre-trained on large datasets to be fine-tuned for specific tasks with minimal data. This method not only accelerates the training process but also improves accuracy in scenarios with limited labeled data, making it particularly valuable in fields like medical imaging and autonomous driving.

Another exciting development involves generative adversarial networks (GANs). GANs consist of two neural networks, a generator and a discriminator, that compete against each other to create new, synthetic instances of data. This approach can be utilized in image recognition to augment training datasets, facilitating improved performance by exposing CNNs to a broader range of potential variations in the input data. Furthermore, GANs can assist in creating realistic simulations for training purposes, thereby enhancing the robustness of the models.

As the application of CNNs in image recognition expands, ethical considerations are increasingly coming to the forefront. Issues such as privacy, bias in AI algorithms, and the potential for misuse of image recognition technologies call for rigorous oversight and adherence to ethical standards. Consequently, there is a growing emphasis on developing transparent and accountable AI systems that prioritize fairness and integrity in decision-making processes.

As the landscape of CNNs and image recognition continues to evolve, numerous career opportunities are arising in these domains. Professionals with expertise in CNN architectures, machine learning, and ethical AI practices will be in high demand as organizations seek to implement cutting-edge image recognition solutions responsibly and effectively.

Convolutional Neural Networks (CNNs) have become a cornerstone of modern technology, particularly in image recognition. As this blog post has shown, CNNs are designed to process and analyze visual data and excel at tasks such as object detection, facial recognition, and image segmentation. Their architecture, loosely inspired by the organization of the animal visual cortex, has led to breakthroughs in applications ranging from healthcare to autonomous vehicles.

The significance of CNNs extends beyond functionality: they have transformed industries by making image processing more accurate and efficient. In medical imaging, for instance, CNNs are used to detect anomalies in X-rays and MRIs, giving doctors valuable diagnostic aids. In security, facial recognition systems powered by CNNs have strengthened surveillance, although they also raise critical ethical questions that society must address.

Moreover, CNNs have empowered creative fields, enabling innovations in art and design. Artists and designers leverage these networks to generate new forms of media, blurring the lines between human creativity and machine learning. This intersection of technology and artistry exemplifies the profound impact of CNNs in our daily lives, leading to advancements and challenges that require careful navigation.

As we look toward the future, it is clear that CNNs will continue to play a pivotal role in shaping technological advancements. To fully appreciate their potential and complexities, individuals are encouraged to delve deeper into the subject. Exploring educational courses, engaging in practical projects, or participating in community discussions can greatly enhance one’s understanding of CNNs. By doing so, we can better harness their capabilities while also being mindful of the implications they bring to society.
